Embodied Cognition for Human-Robot Interaction

Research on natural and socially acceptable human-robot interaction.

The Embodied Cognition for Human-Robot Interaction (ECHOS) group focuses on research in human-robot interaction (HRI) and publishes its work in high-quality international journals and conferences. The group takes a holistic approach to HRI, investigating embedded cognition for natural, multimodal HRI, socially acceptable robot behaviours in HRI use cases, and rigorous user-study evaluation of HRI systems.

The group's methodology is to develop and implement robotic architectures, together with their necessary building blocks, that enable robots to interact with humans in a natural, socially acceptable way. These architectures are then evaluated in scientifically sound user studies. The group has a strong focus on HRI application areas in which robots are used for the common good. Examples include ethically safe physiotherapy robots, teleoperated robots for nuclear decommissioning, collaborative robots in industrial settings, and robots in care homes.

Current Research Topics

Social Robots for Health

How could (and should) a social robot be used to help improve exercise motivation?

Studying how physiotherapists work with their patients suggests that the social presence of a robot such as Pepper could make a difference. Persuasive social influence strategies designed to build rapport also seem to work when Pepper acts as an exercise instructor.

Bringing these ideas together, an ambitious study will use human-supervised machine learning to generate appropriate, personalised, autonomous engagement behaviours while Pepper coaches people through the 9-week NHS running programme.

Contact: Katie Winkle

Kinematics and Social Interaction

Funny? Bored? Aggressive?

Could a robot understand the state of a social interaction (and thus adapt its behaviour appropriately) by analysing only the silhouettes and general poses of the people taking part?

We investigate this question by first looking at how humans do it, then using data mining and machine learning to uncover the underlying constructs of social interaction.

Contact: Séverin Lemaignan, Madeleine Bartlett (University of Plymouth)

RoboPets

Jointly developed with Louis Rice (UWE's Department of Architecture), the RoboPets project explores how small robots (with character!) might integrate into our daily environment.

The project will see four small Vector robots invade one of the campus cafés during summer 2019 and evolve their own personalities by interacting with staff and students.

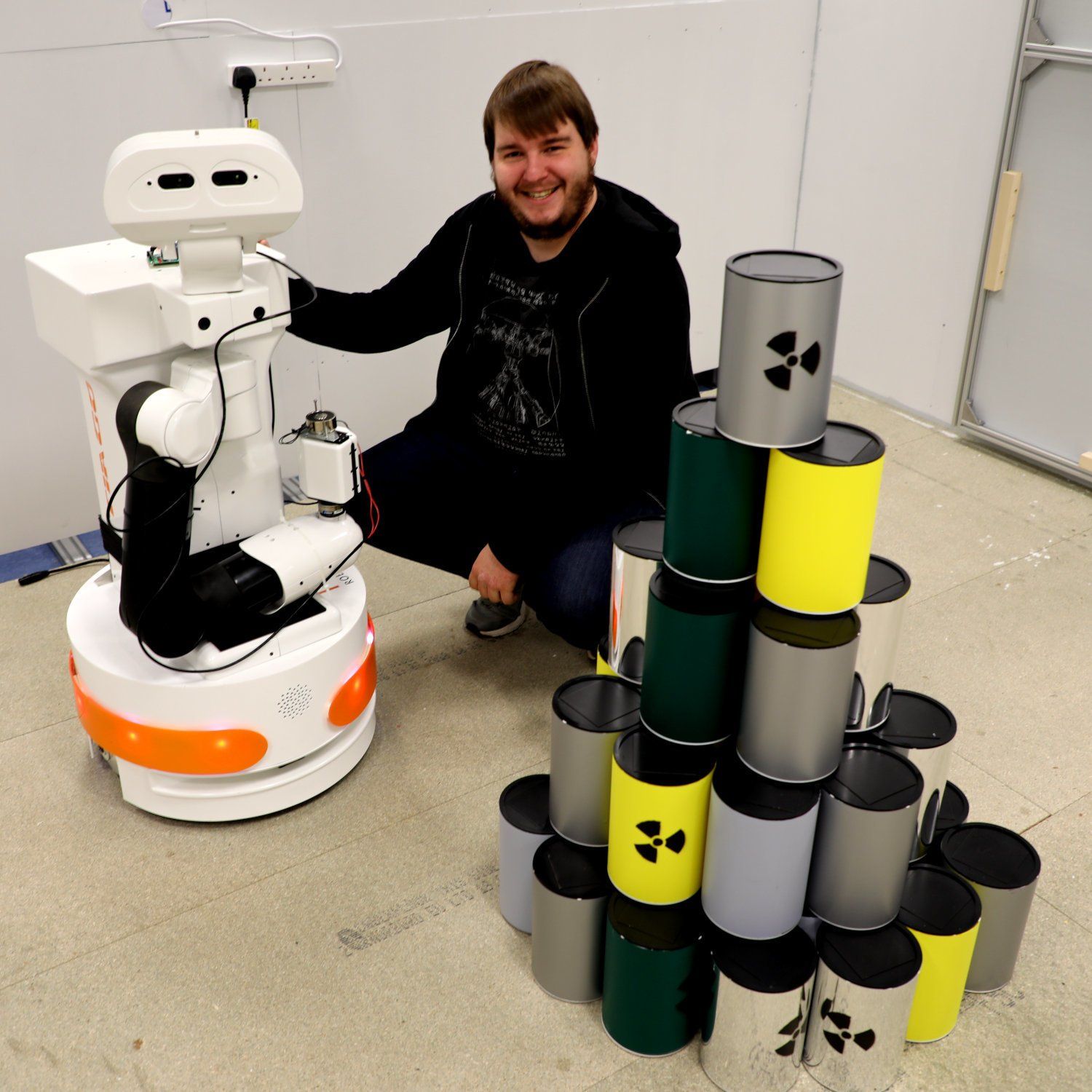

Ambiguous Spatial Language

It might seem counter-intuitive, but humans are often very ambiguous when they talk to each other: we usually go for the simple explanation first, and only provide additional details ("repair") when really needed.

Using machine learning, we are trying to teach our robots the same skill: to be ambiguous when speaking, but to know when to provide the right hint to keep things on track. For example, a robot could use this skill to describe the configuration of a set of barrels to a human operator in a nuclear facility.

Contact: Séverin Lemaignan, Christopher Wallbridge (University of Plymouth)

Research Projects

DigiTOP

(Digital Toolkit for Optimisation of Operators and Technology in Manufacturing Partnerships)

As manufacturing shifts towards smart factories, with interconnected production systems and automation, EPSRC has funded the £1.9m DigiTOP project to develop a predictive toolkit to optimise productivity and communication between human workers and robots.

Academics at the University of Nottingham, Cranfield University, Loughborough University and Bristol Robotics Laboratory are collaborating to deliver an open-access suite of digital tools to enable the real-time capture and prediction of impact, allowing digital technologies to be optimised for manufacturing system performance. Using new human factors theories and data analytics approaches, tools will be designed to inform human requirements for workload, situation awareness and decision making in digital manufacturing. At the same time, demonstrators will be used to test the implementation of sensing technologies that will capture and evaluate performance change and build predictive models of system performance.

The project will also examine the ethical, organisational and social impact of introducing digital manufacturing tools, and digital sensor-based tools for evaluating work performance, into the workplace of the future.