Centre for Machine Vision

Vision is a key ability in the natural world, and by equipping robots with visual skills, we give them an important tool for understanding and extracting information from real-world environments.

Robotics offers exciting opportunities for extending more traditional lines of computer vision research, as robotic systems are very often active systems, able to interact with and alter their environments. The ability to interact with a visual scene means that a robotic system may be able to extract more information from it than a purely passive vision system would.

BBC Radio Somerset Interview

An interview with BBC Somerset exploring the activities of the Centre for Machine Vision, and the new book, "Why You Can't Catch A Rocket To Mars" by Prof. Lyndon Smith.

Current Projects

The majority of the vision research is carried out in the Centre for Machine Vision, which also researches a range of related areas such as medical 3D imaging, vision-based security and industrial inspection.

PhD Opportunities

The Centre for Machine Vision at Bristol Robotics Laboratory has been successful in securing funding from SWBio for a new PhD position on a project titled “Vision‐based artificial intelligence and social network analysis for early prediction of disease from cattle behaviours and interactions”. For more information on the project and how to apply, please click here.

Deadline:

Midnight, Monday 5 December 2022, for a September 2023 start.

There's an opportunity to apply for a funded full-time PhD in the Centre for Machine Vision (CMV), School of Engineering, UWE Bristol for a project titled "Three-dimensional object detection and segmentation in point clouds in the context of aerospace CFD meshing". The studentship will be jointly funded by UWE Bristol and Zenotech Ltd.

You can find out more about this opportunity here.

Deadline:

Tuesday 6 December 2022, for an April 2023 start.

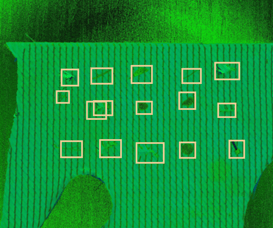

Defect Detection in Composites

Despite their numerous advantages over traditional materials, carbon fibre composites still present challenges for efficient, defect-free manufacture. In particular, the black, shiny nature of composite surfaces makes both manual and automated inspection of raw materials and completed cured parts difficult. In this research, developments in polarisation imaging technology and a novel moving-light-source imaging system have enabled new ways to inspect composite parts rapidly, reliably and cheaply.

The image below (left) shows a polarisation image of a defective component, that is, an image that emphasises the effect of surface structure on the light reflected towards the camera. The defective regions are clearly more visible than in the standard image (right).

Applying state-of-the-art machine learning techniques (in the form of deep learning) allowed our novel software system to detect the defects automatically, as shown by the rectangles.

This research was partly funded by the Digital Engineering Technology & Innovation (DETI) programme in partnership with the National Composites Centre, Bristol, UK (PoC3.10), and by internal funding from the University of the West of England. DETI is a strategic programme of the West of England Combined Authority, delivered in partnership by the National Composites Centre and industry.

Detection of Cutting Location on the Stem of Iceberg Lettuce: A Project with UK Agri-Tech Experts to Develop a Salad-Saving Robot

The Centre for Machine Vision (CMV) at UWE Bristol have joined forces with Agri-tech and machinery experts at Grimme, Agri-EPI Centre, Image Development Systems, Harper Adams University and two of the UK’s largest lettuce growers, G’s Fresh and PDM Produce, in the new Innovate UK-funded project to develop a robotic solution to automate lettuce harvesting.

Whole head, or iceberg, lettuce is the UK’s most valuable field vegetable crop. Around 99,000 tonnes were harvested in the UK in 2019, with a market value of £178 million. But access to reliable seasonal labour has been an increasing problem, exacerbated by Brexit and COVID-19 restrictions. Early indications are that a commercial robotic solution could reduce lettuce harvesting labour requirements by around 50%.

Figure 1

Figure 2

The project will adapt existing leek harvesting machinery to lift the lettuce clear of the ground and grip it between pinch belts, as shown in Figure 1. The lettuce’s outer, or ‘wrapper’, leaves will be mechanically removed to expose the stem.

Our work uses machine vision to identify a precise cut point on the stem, to separate the lettuce head from the stem.

As shown in Figure 2, a ResNet is used to detect two key points on the stem. These two key points will guide and correct the position of the cutting tool along the pinch belts.
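The text does not say how the ResNet outputs its key points. One common design for CNN key-point detectors is to predict one heatmap per key point and take the peak of each heatmap. The sketch below assumes that design; `decode_heatmaps`, `cut_point` and the fraction `t` are hypothetical names and parameters, not the CMV's implementation, and the real system may regress coordinates directly or place the cut differently.

```python
import numpy as np

def decode_heatmaps(heatmaps):
    """Return the (row, col) peak of each key-point heatmap.

    heatmaps: array of shape (K, H, W), one heatmap per key point
    (an assumed network output format, used here for illustration only).
    """
    hm = np.asarray(heatmaps, dtype=float)
    if hm.ndim != 3:
        raise ValueError("expected heatmaps of shape (K, H, W)")
    k, h, w = hm.shape
    flat_peaks = hm.reshape(k, -1).argmax(axis=1)      # strongest response in each map
    rows, cols = np.unravel_index(flat_peaks, (h, w))  # flat index -> 2D pixel coordinates
    return np.stack([rows, cols], axis=1)              # shape (K, 2)

def cut_point(p_first, p_second, t=0.5):
    """Hypothetical rule: place the cut a fraction t of the way from p_first to p_second."""
    p_first = np.asarray(p_first, dtype=float)
    p_second = np.asarray(p_second, dtype=float)
    return p_first + t * (p_second - p_first)

# Example: two synthetic heatmaps with single peaks at (2, 3) and (7, 5).
hm = np.zeros((2, 10, 8))
hm[0, 2, 3] = 1.0
hm[1, 7, 5] = 1.0
top, bottom = decode_heatmaps(hm)
print(cut_point(top, bottom))  # midpoint between the two detected key points
```

The result is in image pixels; turning it into a tool position along the pinch belts would additionally require a camera-to-machine calibration, which is not described here.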

Emotional Pig (BBSRC-funded)

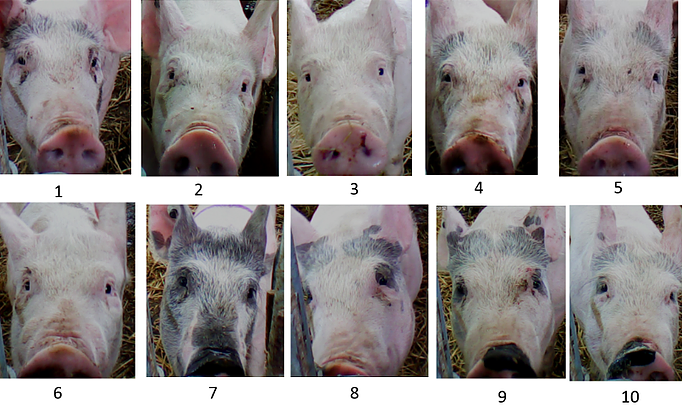

The Centre for Machine Vision has teamed up with animal behaviour and welfare researchers at Scotland’s Rural College (SRUC) to detect different emotional states in pigs using biometrically identified faces. This highly innovative research aims to develop a precision livestock farming tool that can monitor individual pigs and alert farmers to any health and welfare problems.

What we know

Machine vision technology can individually identify pigs using just their face. This is the result of a successful collaboration between UWE and SRUC whereby human facial recognition techniques were adapted to identify individual pigs using only facial images.

The CMV reports that its “Convolutional Neural Network (CNN) model was 97% accurate in discriminating between pigs by using different regions shown by Class Activation Mapping using Grad-CAM. This proof of concept work has demonstrated machine vision offers the potential to realise a low-cost, non-intrusive and practical means to biometrically identify individual animals”.
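Grad-CAM (Gradient-weighted Class Activation Mapping) highlights which image regions drive a CNN's score for a chosen class, which is how facial regions used to tell pigs apart can be visualised. Below is a minimal NumPy sketch of the standard Grad-CAM computation, assuming you have already extracted, for one image, the feature maps of a chosen convolutional layer and the gradients of the target pig's class score with respect to them (for example via your deep learning framework's automatic differentiation). The function name `grad_cam` is hypothetical; this is not the CMV's code.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one image and one target class.

    activations: (K, H, W) feature maps A^k from a convolutional layer
    gradients:   (K, H, W) gradients d y_c / d A^k of the class score y_c
    Returns an (H, W) map scaled to [0, 1].
    """
    A = np.asarray(activations, dtype=float)
    G = np.asarray(gradients, dtype=float)
    if A.shape != G.shape or A.ndim != 3:
        raise ValueError("expected matching (K, H, W) arrays")
    alpha = G.mean(axis=(1, 2))           # one weight per channel: global-average-pooled gradients
    cam = np.tensordot(alpha, A, axes=1)  # weighted sum of feature maps over channels -> (H, W)
    cam = np.maximum(cam, 0.0)            # ReLU: keep only regions that increase the class score
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

In practice the resulting low-resolution map is upsampled to the input image size and overlaid on the face to show which regions the network relied on.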

Pigs are highly expressive, and SRUC research has demonstrated that pigs can signal their intentions to other pigs using different facial expressions. There is also evidence of different facial expressions when pigs are in pain or under stress. By combining the knowledge of animal behaviourists and machine vision experts, the project hopes to develop an innovative on-farm animal health and welfare tool that assesses and records a pig’s condition continuously each day using only the face.

Why do we want to detect emotion?

Understanding animal emotions is an important part of animal welfare research, where detailed behavioural and physiological measures are often used to understand underlying emotional reactions. However, when animal welfare is assessed under real-world, commercial farm conditions, there is far less scrutiny. Welfare assessments often look at the environment rather than the animal, recording what the animal has or doesn’t have (e.g. amount of space, level of water and food provision), as well as some measure of health, mostly at a group level. It is difficult to determine what an animal feels about its environment, and very rare to ask “questions” of individual animals. “By focussing on the pig’s face we hope to deliver a truly animal-centric welfare assessment technique that could tell us something about the importance each individual animal places on their own particular experiences”, said Dr Emma Baxter, a behaviour and welfare scientist at SRUC. “This allows insight into both short-term emotional reactions and long-term individual ‘moods’ of animals under our care”.

This BBSRC grant (value £424,647) is further supported by contributions from industry stakeholders (JSR Genetics Ltd and Garth Pig Practice) and precision livestock specialists (Agsenze), who recognise the potential of such innovative technology to help with animal health and welfare monitoring and to improve economic output. Early identification and resolution of pig health issues result in reduced production costs and improved animal wellbeing.

Currently, on most commercial farms, livestock are visually inspected once a day, which offers only intermittent and limited information about their health and welfare, and only at a group level. Our industry veterinary partners say that tools able to identify animals requiring attention "earlier than the most skilled stockperson" would be highly valued, especially in larger operations where farming is increasingly mechanised and the ratio of pigs to stockpersons is rising. Farming practices that are overly mechanised and reliant on computer and robotic technologies can attract negative reactions, but using advances in technology for the good of both the animals and the stockworkers is highly positive and will enhance the image of farming.

Watch this face

Work has begun at SRUC’s Pig Research Centre where they are capturing 3D and 2D facial images of the breeding sow population under various biologically-relevant situations that are likely to result in different emotional states. Images are then processed at UWE’s Centre for Machine Vision where various techniques are being developed to automatically identify different emotions. After validating these techniques the team will develop the technology for on-farm use with commercial partners where individual sows in large herds will be monitored continuously.

This will allow targeted intervention for the animals that need it, and could also give farmers and their prospective customers an idea of how happy their pigs are.

The SoilEssentials KORE Artificial Intelligence Platform (SKAi)

Knowledge Transfer Partnership with Agsenze

A re-trainable smart camera vision system for agriculture. SKAi builds on GrassVision, an Innovate UK feasibility study that successfully developed a low-cost machine vision system to recognise broad-leaved weeds in grassland and apply herbicide to them precisely.

Programme Aims

There is an urgent agronomic (reducing the amount of plant protection products applied to crops), environmental (pollution reduction), economic (lowering the cost of food production) and political (continuing public pressure for a reduction in ag-chem use) need to modernise agrochemical application, moving from the traditional practice of applying a uniform rate across the whole crop to a much more targeted approach. SKAi aims to meet this need by building a smart camera and artificial intelligence platform for use by farmers, agronomists and agrochemical applicators. This platform will be integrated into the existing KORE precision agriculture platform, extending its functionality to support in-field smart cameras through image transfer and machine learning.

Using this system, we hope to dramatically reduce the total amount of crop protection products applied to crops in the UK and worldwide.

Project value is £891,051

Automatic Weed Imaging and Analysis

Background

Agricultural techniques for weed management in crop fields involve the wide-scale spraying of herbicides, which is economically and environmentally expensive. A growing global population requires increasing crop output, which in turn requires efficient use of agricultural land. By controlling weed growth, a higher yield can be maintained. To reduce the amount of herbicide used, we need to identify the location and structure of weed clusters in a field.

Precision weeding using machine vision

We worked with Harper Adams University to detect the locations of out-of-row weed clusters from 2D images and GPS data. Our 3D techniques (Figure 1) enabled us to determine the structure of the weeds from surface information and to locate the crucial parts of each weed.

The result was efficient, targeted weed-killing techniques such as precision spraying or heat treatment. CMV methods for high-frame-rate 3D detection of broad-leaf and grass weeds in maize crops enable precise determination of weed patch locations. These patches are then analysed to find the “meristem” (main growing stem) to within 1-2 mm.

We have recently been conducting feasibility studies for the detection and eradication of broad-leaved dock (Rumex obtusifolius) in grass crops. Broad-leaved dock is a serious problem: it can survive animal digestion, it is deep-rooted, and some of the herbicides that control it can affect the yield of the desired crops. Initial results (Figure 2) are promising, and we are interested in forming a consortium to explore this further and develop it into a fully automated robotic system.

Figure 1

Figure 2

Underfloor Insulation

Autonomous Exploration, Data Acquisition, Processing and Visualisation

An Innovate UK-funded project in conjunction with Q-Bot Ltd. The project aimed to advance the robotics developed and operated by Q-Bot, specifically for autonomously mapping and insulating underfloor environments in buildings.

Older buildings in particular lose a lot of heat through underfloor voids and updrafts. Q-Bot has successfully developed an integrated robotic system that maps underfloor environments using a semi-automated robotic SLAM (simultaneous localisation and mapping) system. Insulation is then sprayed, semi-automatically, onto the underside of the floorboards from below.

Examples of the insulated floorboards and of the SLAM results are shown below:

The aim is to further automate the system's path planning and to introduce computer vision methods that automatically classify regions of the environment into key components that affect whether insulation is needed, such as walls, boards, pipes, vents and electrical cables.

Polarisation Project

Polarisation describes how the oscillations of a light wave are constrained in direction, for example to a single plane.

Light consists of orthogonal electric and magnetic fields.

Most natural light is unpolarised and so consists of randomly fluctuating field directions. However, a range of natural phenomena (e.g. scattering and reflection) and human inventions (e.g. polarising filters and liquid crystal displays) cause light to become polarised. That is, the electric and magnetic fields become confined to specific planes or are constrained in other ways. In computer vision, both natural and artificially generated polarised light have been used for a range of applications, including specularity reduction, shape recovery, reflectance analysis, image enhancement, segmentation and the separation of reflectance components.

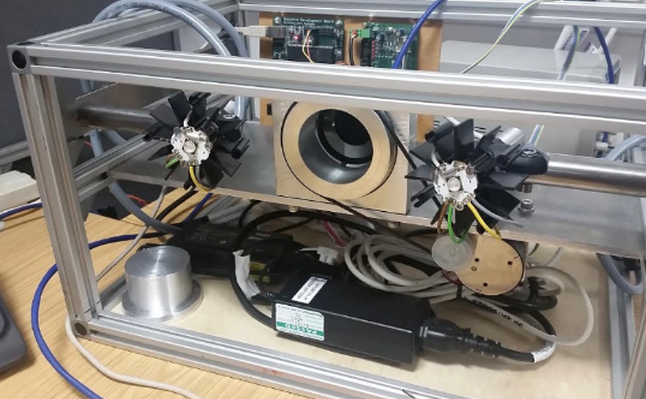

Research into shape-from-polarisation involves extracting polarisation data from a scene and using it to recover geometrical information about the surfaces in that scene. Our device can capture a full polarisation image and combine data from two light sources.

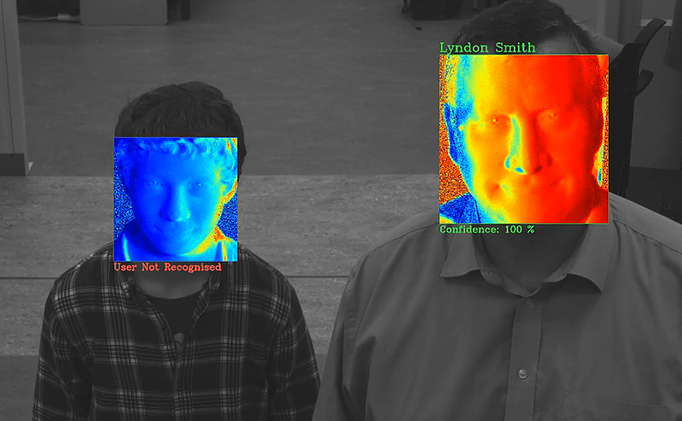
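A polarisation image is often built from several intensity images taken through a linear polariser at different orientations. As a minimal sketch, assuming four images captured with the polariser at 0°, 45°, 90° and 135° (not necessarily how this device works), the linear Stokes parameters give the degree and angle of linear polarisation at each pixel. For shape-from-polarisation, the degree of polarisation of diffusely reflected light can then be converted to the surface zenith angle with the widely used Fresnel-based diffuse model; the refractive index `n = 1.5` and all function names are assumptions for illustration.

```python
import numpy as np

def polarisation_image(i0, i45, i90, i135, eps=1e-12):
    """Intensity, degree (DoLP) and angle (AoLP, radians) of linear polarisation
    from images taken through a polariser at 0, 45, 90 and 135 degrees."""
    i0, i45, i90, i135 = (np.asarray(a, dtype=float) for a in (i0, i45, i90, i135))
    s0 = 0.5 * (i0 + i45 + i90 + i135)   # total intensity
    s1 = i0 - i90                         # 0 deg vs 90 deg component
    s2 = i45 - i135                       # 45 deg vs 135 deg component
    dolp = np.sqrt(s1**2 + s2**2) / np.maximum(s0, eps)
    aolp = 0.5 * np.arctan2(s2, s1)       # phase angle in (-pi/2, pi/2]
    return s0, dolp, aolp

def diffuse_dop(zenith, n=1.5):
    """Degree of polarisation of diffuse reflection for zenith angle theta:
    (n - 1/n)^2 sin^2 t / (2 + 2n^2 - (n + 1/n)^2 sin^2 t + 4 cos t sqrt(n^2 - sin^2 t))."""
    s, c = np.sin(zenith), np.cos(zenith)
    num = (n - 1.0 / n) ** 2 * s**2
    den = 2 + 2 * n**2 - (n + 1.0 / n) ** 2 * s**2 + 4 * c * np.sqrt(n**2 - s**2)
    return num / den

def zenith_from_dop(dop, n=1.5, samples=2048):
    """Invert diffuse_dop numerically; the model increases monotonically on [0, pi/2)."""
    thetas = np.linspace(0.0, np.pi / 2 - 1e-6, samples)
    return np.interp(dop, diffuse_dop(thetas, n), thetas)
```

In this model the angle of polarisation gives the surface azimuth only up to a 180° ambiguity, which has to be resolved by other means before the surface normals can be integrated into a shape.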

Face Recognition

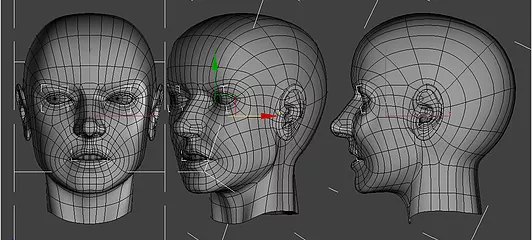

In association with Customer Clever Ltd, our lab is developing an innovative 3D face recognition system for high-security applications. It could be used in places such as retail stores to replace existing 2D systems and increase security. The project is a Knowledge Transfer Partnership (KTP) awarded to us by Innovate UK.

Face recognition systems exist in other parts of the world, but they usually rely on 2D data, which can easily be fooled. The secure 3D system has received enough funding to be implemented commercially, and comes from a lab that has been working on face recognition technology for over 10 years.

Although the project is promising, Professor Lyndon Smith said: "Things which are easy for the human eye to deal with, like changes in background light and people looking in different directions, are big problems for this technology. There’s a difference between making the system work in the laboratory and doing so in a busy supermarket, where there are changes in lighting conditions and people walking around in the background."

Even so, the lab has high hopes for this system and hopes to see it in commercial use in the near future.

Pig Face Identification

Current identification of individual animals on farms is limited to RFID tags. These tags are stressful for the animals, and the radio systems themselves often interfere with one another.

In collaboration with SRUC, Manchester University and AB-Agri, and funded by SARIC, the CMV is developing a system that identifies individual pigs by their faces instead of RFID tags. This passive, non-invasive technology can accurately identify a pig as it approaches the water, allowing measurements (e.g. weight) to be assigned to individuals. This will allow each farm animal to be recognised individually by a vision system.

Imaging Plants

In collaboration with Edinburgh University, we have designed and developed a low-cost photometric stereo rig specifically for imaging plants to measure phenotypic traits (the observable characteristics that result from the interaction of a plant's genetics with its environment).

By using near-infrared light sources we are able to image the plants 24 hours a day over long periods without interfering with their growth cycles.
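Photometric stereo recovers surface orientation from several images of a static scene, each lit from a different known direction. Below is a minimal sketch of classic Lambertian photometric stereo, solved by least squares at every pixel. It assumes distant light sources with known unit directions, a Lambertian (matte) surface and no shadows or specular highlights; the rig's actual processing may differ, and `photometric_stereo` is a hypothetical name. The near-infrared wavelength does not change the underlying maths.

```python
import numpy as np

def photometric_stereo(images, light_dirs, eps=1e-8):
    """Per-pixel albedo and unit surface normals from m >= 3 images.

    images:     (m, H, W) intensities, one image per light source
    light_dirs: (m, 3) light direction for each image
    Model: I = L @ g, where g = albedo * normal (Lambertian, no shadows).
    """
    I = np.asarray(images, dtype=float)
    L = np.asarray(light_dirs, dtype=float)
    m, h, w = I.shape
    if m < 3 or L.shape != (m, 3):
        raise ValueError("need at least 3 images and an (m, 3) light matrix")
    # Solve all pixels at once: each column of I.reshape(m, -1) is one pixel.
    g, *_ = np.linalg.lstsq(L, I.reshape(m, -1), rcond=None)   # (3, H*W)
    albedo = np.linalg.norm(g, axis=0)                          # |g| = albedo
    normals = (g / np.maximum(albedo, eps)).T.reshape(h, w, 3)  # g / |g| = unit normal
    return albedo.reshape(h, w), normals
```

Using more than three lights, as in this least-squares form, makes the estimate more robust to noise; pixels that are shadowed under some lights would normally be handled by discarding those measurements.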

3D Cattle Condition Monitoring

The number of British cattle herds is decreasing. Our system quickly and accurately monitors any changes in individual cow condition, weight and mobility. This speeds up the identification of any potential health issues such as lameness, improving the welfare of the cows and the sustainability and profitability of the dairy industry.

The system is being trialled at a dairy farm in Somerset to improve herd management.

Window Crack Characterisation

A crack in a windscreen can eventually mean that the entire windscreen has to be replaced (left). To prevent this, AutoGlass U.K. can repair the defect if you send them a photo of the crack via their mobile phone app.

At the CMV, we use machine vision to segment the crack and to overcome the dust, dirt and reflections on the glass.
