Swarm Robotics

Swarm robotics is a new approach to coordinating the behaviour of large numbers of relatively simple robots in a decentralised manner.

Research Lead: Dr. Sabine Hauert

Background

The swarm robotics group engineers swarms across scales, from small numbers of computationally powerful robots that work in teams for applications in warehouses, to large numbers (100+) of tiny robots for outdoor sensing or biomedical applications. Swarm intelligence allows agents to work together through simple local interactions with their neighbours or their environment. The swarm behaviours that emerge from these interactions (e.g. decision making, exploration, collective motion, shape formation, mapping) are typically more than the sum of their parts.

Swarm strategies are either bio-inspired (e.g. ants, bees, cells) or are designed de novo using bio-inspiration.

Associate Professor Sabine Hauert, University of Bristol & BRL, discusses the topic, "Should we worry about Swarm Robotics (or simply rebrand as flocking robots?)".

The podcast is part of the Living With AI Podcast: Challenges of Living with Artificial Intelligence series, produced by the Trustworthy Autonomous Systems Hub.

Examples of Previous Research Projects

Probabilistic Modelling In Swarm Robotic Systems

This project uses probabilistic modelling techniques to understand the impact of individual control parameters on swarm behaviour, and thus to optimise the controller design.

Because the robots in a swarm have only local perception and very limited local communication abilities, one of the challenges in designing swarm robotic systems with a desired collective behaviour is understanding the effect of individual behaviour on group performance.

The research focuses on the design and optimisation of interaction rules for a group of foraging robots that aim to achieve energy efficiency collectively.
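To make the idea concrete, the sketch below shows one very simple way an individual control parameter can be linked to a swarm-level energy measure. It is an illustrative expected-value model, not the project's actual model: the two-state (resting/foraging) structure, the interference term, and every name and number (`p_leave`, `p_return`, `p_find_alone`, `interference`, the energy values) are assumptions introduced here.

```python
def steady_state_foraging_fraction(p_leave: float, p_return: float) -> float:
    """Long-run fraction of robots that are foraging.

    Assumes a two-state Markov chain per robot: a resting robot leaves the
    nest with probability p_leave per step, and a foraging robot returns
    with probability p_return per step (hypothetical parameters).
    """
    return p_leave / (p_leave + p_return)


def expected_net_energy_rate(
    n_robots: int,
    p_leave: float,
    p_return: float = 0.1,       # assumed return probability per step
    p_find_alone: float = 0.2,   # assumed chance a lone forager finds food
    interference: float = 0.2,   # assumed crowding penalty per extra forager
    food_energy: float = 100.0,  # assumed energy gained per food item
    search_cost: float = 5.0,    # assumed energy spent per foraging step
    rest_cost: float = 1.0,      # assumed energy spent per resting step
) -> float:
    """Expected net swarm energy per time step at steady state."""
    foragers = n_robots * steady_state_foraging_fraction(p_leave, p_return)
    resters = n_robots - foragers
    # More foragers get in each other's way, lowering individual success.
    p_find = p_find_alone / (1.0 + interference * foragers)
    gain = foragers * p_find * food_energy
    cost = foragers * search_cost + resters * rest_cost
    return gain - cost


def best_leave_probability(n_robots: int, candidates: list[float]) -> float:
    """Pick the candidate p_leave with the highest expected net energy."""
    return max(candidates, key=lambda p: expected_net_energy_rate(n_robots, p))


if __name__ == "__main__":
    for p in [0.01, 0.02, 0.05, 0.1, 0.2, 0.5]:
        print(f"p_leave={p:.2f}  net energy/step={expected_net_energy_rate(20, p):.2f}")
```

Under these assumed numbers, sending too few robots out wastes foraging opportunities, while sending too many increases interference and search cost, so the net energy peaks at an intermediate value of `p_leave`. Sweeping an individual parameter against a group-level measure in this way is the kind of question such models are meant to answer.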

Symbiotic Evolutionary Robot Organisms (SYMBRION)

This EU FP7-funded project is investigating novel principles of behaviour, adaptation and learning for self-assembling robot "organisms" based on artificial evolution and evolutionary computational approaches.

It investigates the principles by which large swarms of robots can evolve and adapt together into different organisms, based on bio-inspired approaches.

The Emergence of Artificial Culture in Robot Societies

This is a four-year EPSRC-funded project with six UK university partners: Abertay-Dundee, Exeter, Leeds Met, Manchester, Warwick and UWE Bristol (project lead).

A profound question that transcends disciplinary boundaries is "how can culture emerge and evolve as a novel property in groups of social animals?"

We can narrow that question by focussing our attention on the very early stages of the emergence and evolution of simple cultural artefacts; the transition, as it were, from nothing recognisable as culture, to something (let us call this proto-culture).

Verifiable Autonomy

In the next 20 years, we expect to see autonomous vehicles, aircraft, robots, devices, swarms, and software, all of which will (and must) be able to make their own decisions without human intervention.

Abstract

Autonomy is surely a core theme of technology in the 21st century. Within 20 years, we expect to see fully autonomous vehicles, aircraft, robots, devices, swarms, and software, all of which will (and must) be able to make their own decisions without direct human intervention. The economic implications are enormous: for example, the global civil unmanned air-vehicle (UAV) market has been estimated to be £6B over the next 10 years, while the world-wide market for robotic systems is expected to exceed $50B by 2025.

This potential is both exciting and frightening. Exciting, in that this technology can allow us to develop systems and tackle tasks well beyond current possibilities. Frightening, in that the control of these systems is now taken away from us. How do we know that they will work? How do we know that they are safe? And how can we trust them? None of these questions can be answered with current technology. We cannot say that such systems are safe, will not deliberately try to injure humans, and will always try their best to keep humans safe. Without such guarantees, these new technologies will neither be allowed by regulators nor accepted by the public.

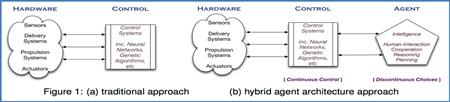

Imagine that we have a generic architecture for autonomous systems such that the choices the system makes can be guaranteed, and that these guarantees are backed by strong mathematical proof. If we have such an architecture, upon which our autonomous systems (be they robots, vehicles, or software) can be based, then we can indeed guarantee that our systems never intentionally act dangerously, will endeavour to be safe, and will, as far as possible, act in an ethical and trustworthy way. It is important to note that this is separate from the problem of how accurately the system understands its environment. Due to inaccuracy in modelling the real world, we cannot say that a system will be absolutely safe or will definitely achieve something; instead we can say that it tries to be safe and decides to carry out a task to the best of its ability. This distinction is crucial: we can only prove that the system never decides to do the wrong thing; we cannot guarantee that accidents will never happen. Consequently, we also need to make an autonomous system judge the quality of its understanding and require it to act taking this into account. We should also verify, by our methods, that the system's choices do not exacerbate any potential safety problems.

Our hypothesis is that by identifying and separating out the high-level decision-making component within autonomous systems, and providing comprehensive formal verification techniques for this, we can indeed directly tackle questions of safety, ethics, legality and reliability. In this project, we build on internationally leading work on agent verification (Fisher), control and learning (Veres), safety and ethics (Winfield), and practical autonomous systems (Veres, Winfield) to advance the underlying verification techniques and so develop a framework allowing us to tackle questions such as the above. In developing autonomous systems for complex and unknown environments, being able to answer such questions is crucial.
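As a rough illustration of what verifying a separated decision-making component can mean, the sketch below checks a toy rule-based decision function against a safety property by enumerating every possible belief state. It is only a stand-in for the formal verification techniques the project refers to: the belief names, the actions, the `decide` rules and the property are all hypothetical, and exhaustive enumeration is feasible here only because the belief space is tiny.

```python
from itertools import product
from typing import Callable, Dict, List

Beliefs = Dict[str, bool]

# Hypothetical beliefs the high-level decision component reasons over.
BELIEFS = ("human_nearby", "obstacle_ahead", "battery_low")


def decide(beliefs: Beliefs) -> str:
    """Toy high-level decision component (assumed rules, in priority order)."""
    if beliefs["human_nearby"]:
        return "stop"
    if beliefs["obstacle_ahead"]:
        return "turn"
    if beliefs["battery_low"]:
        return "return_to_base"
    return "move_forward"


def never_advances_towards_human(beliefs: Beliefs, action: str) -> bool:
    """Safety property: if a human is believed nearby, do not move forward."""
    return not (beliefs["human_nearby"] and action == "move_forward")


def verify(
    decide_fn: Callable[[Beliefs], str],
    prop: Callable[[Beliefs, str], bool],
) -> List[Beliefs]:
    """Check prop for every belief state; return all counterexamples."""
    counterexamples = []
    for values in product([False, True], repeat=len(BELIEFS)):
        beliefs = dict(zip(BELIEFS, values))
        if not prop(beliefs, decide_fn(beliefs)):
            counterexamples.append(beliefs)
    return counterexamples


if __name__ == "__main__":
    failures = verify(decide, never_advances_towards_human)
    print("property holds" if not failures else f"violations: {failures}")
```

The check says nothing about whether `human_nearby` is sensed correctly. It only establishes that, given its beliefs, the component never chooses the unsafe action, which mirrors the distinction drawn above between proving the decisions and guaranteeing the outcomes.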